Meloncillo

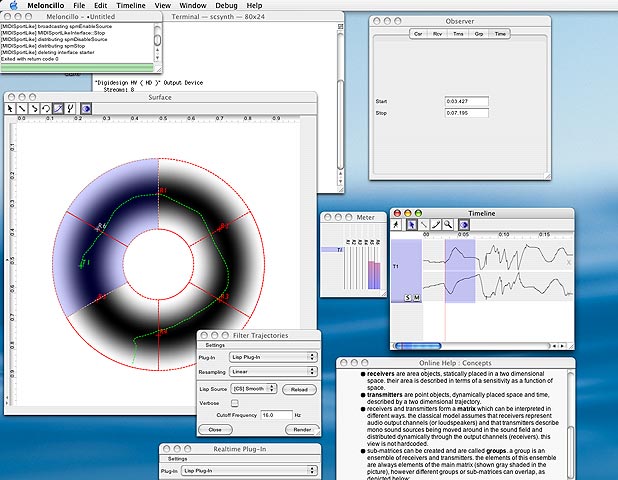

Meloncillo was my M.A. project for graduating from the Electronic Studio of the TU Berlin. It is software for composing spatialisations. It does not imply a particular model of spatialisation by itself, but exposes a programmable interface for implementing different models. The idea is to provide two types of objects in a graphical interface: 'receivers' are static areas associated with intensities, much like heat maps, and 'transmitters' are dynamically moving point-like objects. Their trajectories can be recorded in real time or edited offline in a timeline view. The spatialisation algorithms give meaning to the matrix of transmitters and receivers. The following screenshot shows six receivers arranged in a circle and one transmitter, which appears both in the timeline frame on the right and, as a detail of its 2D trajectory, in the surface view on the left:

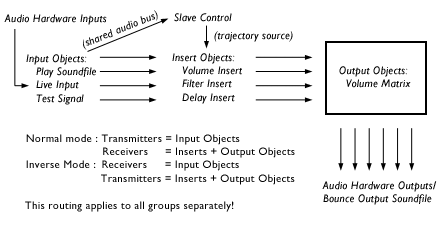
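To make the receiver/transmitter idea concrete, here is a minimal sketch of how such a matrix could be computed at one instant: each receiver is treated as a static intensity field, and each transmitter samples every field at its current position. The disc shape with a linear falloff, and the names `Receiver` and `sense_matrix`, are hypothetical choices for illustration only; Meloncillo's receivers can have other shapes and its actual interface is not reproduced here.

```python
import math
from dataclasses import dataclass


@dataclass
class Receiver:
    """Hypothetical receiver: a disc whose intensity falls off linearly
    from 1.0 at its centre to 0.0 at its rim."""
    cx: float
    cy: float
    radius: float

    def intensity(self, x: float, y: float) -> float:
        d = math.hypot(x - self.cx, y - self.cy)
        return max(0.0, 1.0 - d / self.radius)


def sense_matrix(transmitter_positions, receivers):
    """Return rows of intensities: matrix[t][r] is the intensity of
    receiver r at the current position of transmitter t."""
    return [[r.intensity(x, y) for r in receivers]
            for (x, y) in transmitter_positions]


# Six receivers on the unit circle, as in the screenshot above.
receivers = [
    Receiver(math.cos(2 * math.pi * k / 6), math.sin(2 * math.pi * k / 6), 1.0)
    for k in range(6)
]
# One transmitter, sampled at the instant it sits on receiver 0.
matrix = sense_matrix([(1.0, 0.0)], receivers)
```

A recorded trajectory is then just a sequence of positions over time; evaluating `sense_matrix` at each sample yields a time-varying matrix, which a spatialisation algorithm interprets, for example as loudspeaker gains.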

The algorithms included with the distribution are: Vector Base Amplitude Panning (VBAP), both in the 'normal' forward way, which treats the receivers as loudspeaker outputs and the transmitters as moving sound objects to be distributed, and in an 'inverse' way; binaural convolution spatialisation, using the HRTF library from the IRCAM LISTEN project; and Ambisonics, using the IRCAM spat~ object. For instance, the forward VBAP can be illustrated by the following signal flow:
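As a rough illustration of the forward case, the sketch below computes standard pairwise 2D VBAP gains: it finds the pair of adjacent loudspeakers enclosing the source direction, solves for the two gains that reproduce that direction, and normalises them to constant power. It assumes loudspeakers given as azimuths in degrees, sorted and with adjacent speakers less than 180° apart (such as the six-speaker circle above). The function name `vbap_2d` is hypothetical and this is not Meloncillo's own implementation.

```python
import math


def vbap_2d(source_deg, speaker_degs):
    """Pairwise 2D VBAP: return one gain per loudspeaker.

    speaker_degs: sorted azimuths in degrees; adjacent speakers
    (including last -> first) are assumed to be < 180 degrees apart.
    """
    p = (math.cos(math.radians(source_deg)), math.sin(math.radians(source_deg)))
    n = len(speaker_degs)
    gains = [0.0] * n
    for i in range(n):
        j = (i + 1) % n
        a1, a2 = math.radians(speaker_degs[i]), math.radians(speaker_degs[j])
        l1 = (math.cos(a1), math.sin(a1))
        l2 = (math.cos(a2), math.sin(a2))
        det = l1[0] * l2[1] - l1[1] * l2[0]
        if abs(det) < 1e-12:
            continue  # degenerate pair (coincident or opposite speakers)
        # Solve p = g1 * l1 + g2 * l2 for (g1, g2) with Cramer's rule.
        g1 = (p[0] * l2[1] - p[1] * l2[0]) / det
        g2 = (l1[0] * p[1] - l1[1] * p[0]) / det
        if g1 >= -1e-9 and g2 >= -1e-9:
            # Source lies between this pair: clamp rounding noise and
            # normalise so that g1^2 + g2^2 = 1 (constant power).
            g1, g2 = max(g1, 0.0), max(g2, 0.0)
            norm = math.hypot(g1, g2)
            gains[i], gains[j] = g1 / norm, g2 / norm
            return gains
    return gains  # no enclosing pair found: silence


speakers = [0, 60, 120, 180, 240, 300]
gains = vbap_2d(30, speakers)  # source halfway between speakers 0 and 1
```

A source exactly on a loudspeaker direction gets gain 1 on that speaker alone; one halfway between two speakers gets about 0.707 on each.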

The actual sound output is produced by coupling Meloncillo with an OSC-aware synthesiser such as SuperCollider or Max/PD. Csound can also be used for offline rendering and trajectory transformations.

Further Resources

The project includes some tutorial documentation. If you are interested and can read German, you can also obtain the PDF of the thesis, which describes the reasoning and the programmable interfaces in more detail.